Azure SQL Database is a very flexible service that can be easily scaled to fit the needs of the moment. In most solutions, I like to orchestrate the up and down scaling right from within Azure Data Factory, and I’m always interested in more efficient approaches to do this.

<— Update 4-7-2019:

I’ve learned from a colleague (thanks Roland

SELECT DATABASEPROPERTYEX(db_name(),'edition') AS ServiceTier , DATABASEPROPERTYEX(db_name(),'serviceobjective') AS ComputeSize

—>

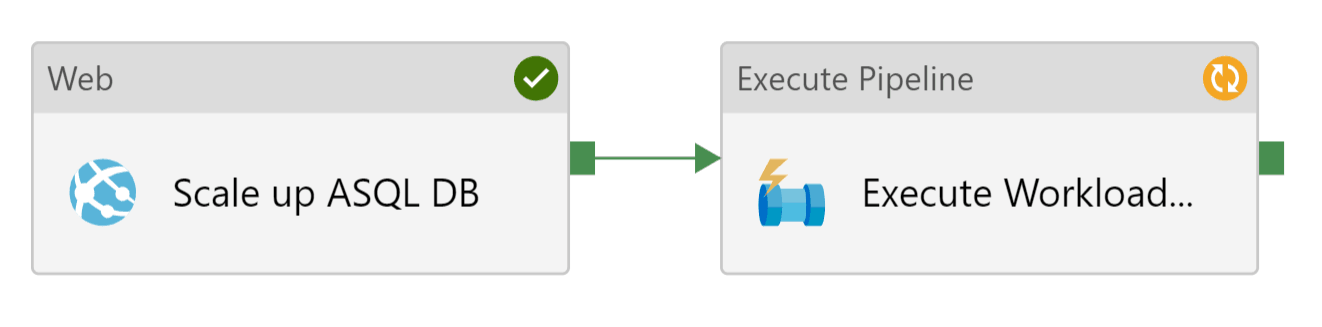

Until today, to scale up an Azure SQL Database before a particular workload in Azure Data Factory, I had quite some options to choose from to achieve this:

- Create a LogicApp, and trigger it with a Web Activity in Azure Data Factory.

- Create an Azure Function, and invoke the function using the new Azure Function Activity in Azure Data Factory.

- Create an Azure Automation workbook, add a webhook to it and call that with a Web Activity in Azure Data Factory.

- Create a T-SQL script with an ALTER DATABASE statement and call that with a Stored Procedure Activity in Azure Data Factory.

New kid in town (well, at least for me)

But today, my dear colleague Simon Zeinstra pointed out that the Azure Data Factory Web Activity supports managed identity (MSI) authentication, thanks

The Web Activity seems to call the REST API synchronously, hence it does not finish before the scale operation is completed.

Configuration

The following configuration for the Web Activity is what worked in my set up.

- URL: Use the following URL, where you replace the stuff between {brackets}. Be sure to specify the Azure SQL Server without the “.database.windows.net” part.

https://management.azure.com/subscriptions/{your SubscriptionID}/resourceGroups/{your RG}/providers/Microsoft.Sql/servers/{your sqlservername}/databases/{your databasename}?api-version=2017-10-01-preview - Methode:

PUT - Headers: Add a header with name

Content-Type, and valueapplication/json. - Body: Use the following json body, where you specify the sku name and tier that you want your database to be scaled to, and the region of the database:

{"sku":{"name":"P1","tier":"Premium"}, "location": "West Europe"} - Authentication:

MSI - Resource:

https://management.azure.com/

Authorisation

If you have completed your configuration and debug the Web Activity, you may get this error message:

{

"errorCode": "2108",

"message": "{\"error\":{\"code\":\"AuthorizationFailed\",\"message\":\"The client '{GUID}' with object id '{GUID}' does not have authorization to perform action 'Microsoft.Sql/servers/databases/write' over scope '/subscriptions/{subid}/resourceGroups/{rgid}/providers/Microsoft.Sql/servers/{sqlservername}/databases/{dbname}'.\"}}",

"failureType": "UserError",

"target": "Scale up ASQL DB"

}

This means the managed identity (MSI) of our Azure Data Factory does not yet have the correct permissions on our Azure SQL Server. Follow these steps to do this:

- In the Azure Portal, go to the SQL Server.

- Open the ‘Access control (IAM)’ option.

- Click ‘Add role assignment’.

- Choose role ‘Contributor’.

- In the search box for ‘Select’, type the exact name of your Azure Data Factory resource, and select it.

- Click Save.

Now the managed identity (MSI) of your Azure Data Factory is able to change the options of your database!

Quickstart ADF pipeline example

For your convenience, I’ve shared an example Azure Data Factory pipeline on GitHub.

| { | |

| "name": "ADF_Scale_Azure_SQLDB", | |

| "properties": { | |

| "activities": [ | |

| { | |

| "name": "ADF_Scale_Azure_SQLDB", | |

| "type": "WebActivity", | |

| "policy": { | |

| "timeout": "7.00:00:00", | |

| "retry": 0, | |

| "retryIntervalInSeconds": 30, | |

| "secureOutput": false, | |

| "secureInput": false | |

| }, | |

| "typeProperties": { | |

| "url": { | |

| "value": "@concat('https://management.azure.com/subscriptions/', pipeline().parameters.SubscriptionID, '/resourceGroups/', pipeline().parameters.ResourceGroupName, '/providers/Microsoft.Sql/servers/',pipeline().parameters.AzureSQLServerName,'/databases/',pipeline().parameters.AzureSQLDatabaseName,'?api-version=2017-10-01-preview')", | |

| "type": "Expression" | |

| }, | |

| "method": "PUT", | |

| "headers": { | |

| "Content-Type": "application/json" | |

| }, | |

| "body": { | |

| "value": "@json(concat('{\"sku\":{\"name\":\"', pipeline().parameters.ComputeSize, '\",\"tier\":\"', pipeline().parameters.ServiceTier, '\"}, \"location\": \"', pipeline().parameters.AzureRegionName, ' \"}' ) )", | |

| "type": "Expression" | |

| }, | |

| "authentication": { | |

| "type": "MSI", | |

| "resource": "https://management.azure.com/" | |

| } | |

| } | |

| } | |

| ], | |

| "parameters": { | |

| "ServiceTier": { | |

| "type": "String", | |

| "defaultValue": "Standard" | |

| }, | |

| "ComputeSize": { | |

| "type": "String", | |

| "defaultValue": "S0" | |

| }, | |

| "AzureSQLServerName": { | |

| "type": "String", | |

| "defaultValue": "<specifyDefaultSQLServer>" | |

| }, | |

| "AzureSQLDatabaseName": { | |

| "type": "String", | |

| "defaultValue": "<specifyDefaultSQLDB>" | |

| }, | |

| "SubscriptionID": { | |

| "type": "String", | |

| "defaultValue": "<specifyDefaultSubscriptionID>" | |

| }, | |

| "ResourceGroupName": { | |

| "type": "String", | |

| "defaultValue": "<specifyDefaultRG>" | |

| }, | |

| "AzureRegionName": { | |

| "type": "String", | |

| "defaultValue": "West Europe" | |

| } | |

| } | |

| } | |

| } |

Conclusion

You now know how to effortlessly use a Web Activity in Azure Data Factory to scale your Azure SQL Database.

The ease-of-use of this solution is awesome, and largely due to how the authentication between Azure Data Factory and Azure SQL is taken care of via managed identity (MSI). Keeping any credentials out of my code, and I don’t even have to use Azure Key Vault to accomplish this.

The other options I mentioned like LogicApps, Functions and Runbooks sure provide more features and options. Time will tell if this simple option is going to be my new default, but it sure seems like it

More info:

- https://docs.microsoft.com/en-us/azure/data-factory/control-flow-web-activity

- https://docs.microsoft.com/en-us/azure/sql-database/sql-database-single-database-scale

- https://docs.microsoft.com/en-us/azure/active-directory/managed-identities-azure-resources/overview

- https://docs.microsoft.com/en-us/azure/active-directory/managed-identities-azure-resources/services-support-msi

- https://docs.microsoft.com/en-us/azure/sql-database/sql-database-service-tiers-dtu

by

by

Will the same work for AAS?

Hi Derek, yes this also works for AAS!

I’ve shared the json of an example Azure Data Factory pipeline on GitHub on this link: https://gist.github.com/DaveRuijter/7daeacc3fc13ccce22739e634ec826ab.

Don’t forget to add your Azure Data Factory with a Contributor role to your Azure Analysis Services server!

Hi Dave,

first of all, thanks for sharing this!

I implemented your example regarding the AAS, and it works.

https://gist.github.com/DaveRuijter/7daeacc3fc13ccce22739e634ec826ab

The only issue that we are running into is that the identities that we have defined to be Analysis Services Admins are removed when the df pipeline runs.

Hi Dave!

I just fired this up for a project I’m working on and it’s pretty fantastic and simple. Thank you for sharing and the documentation/notes/JSON code. Much appreciated!

Thanks again!

Glad you like it! That’s great to hear.

I get error The client xxx with object id xxx does not have authorization to perform action ‘Microsoft.Sql/servers/databases/write’

I HAVE inserted the datafactory as Contributor as instructed (Even tried owner). Has something changed explaining that this does not work? Or do I miss some step? Also I have double checked the other parameters.

I must comment that this was my error. I used FQDN server name and it should be the short one.

Hi Sam,

Great you got it solved! Indeed it should be the short name of the server, without the rest of the ‘URL’ of the server name.

Hi,

works very nice for scaling between basic, standard and premium but I’m having trouble scaling up vcore. I would like to scale to Gen4 – General Purpose (GP_Gen4_4) (inlcuding the azure hybrid benefit saving).

What would the json body be for that

Hi! Great question, thanks for asking. In your case the ServiceTier would be ‘GeneralPurpose’ and the ComputeSize would be ‘GP_Gen4_4’. )

)

I’ve learned from a colleague that you can find the correct values with the SQL query below, after you have manually set the database to the desired tier and size (thanks Roland!

SELECT DATABASEPROPERTYEX(db_name(),'edition') AS ServiceTier , DATABASEPROPERTYEX(db_name(),'serviceobjective') AS ComputeSizeI’ll update my blog post to note this.

hi, thanks for the reply. what I’m really interested to know is how to include the licence hybrid benifit saving.

https://docs.microsoft.com/en-gb/rest/api/sql/databases/update#databaselicensetype

so maybe the json would look like this…but I cant find the values to put

{“sku”:

{“name”:”GP_Gen4_4″,”tier”:”GeneralPurpose”}

,”licenseType”:

{“BasePrice”:”??”,”LicenseIncluded “:”??”}

,”location”:”West Europe”

}

It’s been a while, have you found the solution? If so, please feel free to share it!

Hi,

nice article! Have you tried this with an azure sql managed instance? i can successfully call the get method from the api, but on calling put/patch i get an unauthorized error – the downscaling however worked… upscaling (with the same parameterized activity) didn’t work…

Regards,

tim

Hi Tim, I have not tried this on a Managed Instance.

Hi Dave,

I’ve got it working:

For the managed instance, you have to add the data factory as contributor to the resource group!

Regards,

Tim

Thanks Dave, you saved my day!

Awesome! Glad to hear.

Not able to authorize, can you please share what would be the the authorization header. Do we need to get access token or what i need to provide in authorization header

Please double check the Authentication type.. is it MSI?

I am getting this in response

{“error”:{“code”:”AuthenticationFailed”,”message”:”Authentication failed. The ‘Authorization’ header is missing.”}

Hi!

Great tip! Will this work when using basic authentication instead of managed identity?

I don’t think so. It might also work with some form of oauth token authentication. But basic, no.

Thanks for your post.

I want to scale Azure SQL database DTUs. Your post doesn’t mention any method to change DTUs for a specific Edition. For example what if I want to change DTUs to 200/800 for Edition “S4” Is this possible? Please Suggest..

Got it, Thanks

How did you fix this?

You can change the tier to get more DTUs. You can’t set the DTU level.

It worked fine when i ran in test / debug mode but when i moved it to production/datafactory, it failed with below message. It looks like URL API version is in preview which you used ?api-version=2017-10-01-preview

Is there a GA version out?

Operation on target dbscale failed: {“error”:{“code”:”InvalidRequestContent”,”message”:”The request content was invalid and could not be deserialized:

‘Could not find member ‘datasets’ on object of type ‘ResourceDefinition’. Path ‘datasets’.’.”}}

The API version is no issue, you can use the preview version.

There must be something else going wrong.

Can you explain more about when this error comes up?

Hi dave,

I am bumping into the same issue, in debug mode it works like a charm but when published and executed via a trigger I get the following error:

Operation on target dbscale failed: {“error”:{“code”:”InvalidRequestContent”,”message”:”The request content was invalid and could not be deserialized:

‘Could not find member ‘datasets’ on object of type ‘ResourceDefinition’. Path ‘datasets’.’.”}}

I can’t find any documentation regarding this error, do you have any idea?

Kind regards,

Marc

Hi Marc, do you have more information on the issue? When does it happen, what is the input for the web activity, what is the output, those kinds of things.. Maybe it provides a hint of what is going wrong..

Can we do the same for Azure Sql POOL

Yes, that should be possible with some minor adjustments

Hi Dave, this looked very promising when I tried it out and it worked immediately. But when I trigger the pipeline the web activity times out after 1 min. Have you seen that issue before, and maybe can you suggest a solution?

Hi Dave, this is an addition to my previous message (can’t respond to that one yet, still waiting moderation).

It now seems that sometimes the pipeline works, sometimes it fails. I can imagine that for larger databases this operation will fail more often – as Microsoft are replicating your database (which takes time depending on data volume) in a different service tier when you’re scaling the DB.

Hi Bas,

Sorry I missed your questions.

By default, the web activity in Azure Data Factory has a timeout of 1min for POST requests.

So that is what is causing this issue.

And, it seems that this POST request is run synchronous, so it waits until it completes..

Maybe you can think of a solution that is asynchronous?

Thank you sir! that worked like a charm !

Hi Dave,

I have this set up, however the higher ups at my company feel that Contributor may be too permissive. They even feel that SQL DB Contributor may still be too permissive. I’m thinking a custom RBAC role with the following permissions may work.

Read List/Get Azure SQL Database(s) “Microsoft.Sql/servers/databases/read”

Write Create/Update Azure SQL Database “Microsoft.Sql/servers/databases/write”

Other Pause a Datawarehouse database. “Microsoft.Sql/servers/databases/pause/action”

Other Resume a Datawarehouse database. “Microsoft.Sql/servers/databases/resume/action”

Read List/Get Azure SQL Server(s) “Microsoft.Sql/servers/read”

Read Gets the status of a database operation “Microsoft.Sql/servers/databases/operationResults/read”

Can you provide any feedback on this?

Always good to apply least privilege

Hi Dave,

I am getting the below error message. Can you please help me out?

{“error”:{“code”:”ParentResourceNotFound”,”message”:”Can not perform requested operation on nested resource. Parent resource ‘xxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxx’ not found.”}}