I got a few questions lately on how to integrate the refresh of a Power BI dataset in the orchestration that Azure Data Factory offers. For instance to process your Power BI model directly after you have loaded new data in the data lake or the datawarehouse database. Effectively ‘daisy-chaining’ these activities. This absolutely makes sense, but can be quite tricky to set-up.

Solution requirements

Part of the trickiness is due to the fact that I not only want a clean and easy solution, but I’ve also got to be able to put this solution in production. So, I’ve got the following list of requirements:

- Integrate in Azure Data Factory, for reasons stated above

- Easy deployment using Azure DevOps release pipeline

- No personal account for authentication

- Store sensitive data (secrets/passwords) in Azure Key Vault only

- No sensitive data in logs, while executing the solution

The solution

The ‘tldr’ of this solution is to leverage the support for Service Principals in Power BI and use the Power BI REST API in a Web Activity in Azure Data Factory. Sounds easy, doesn’t it? Well, it can be a bit of challenge to set-up, but with this guide it should be easy for you from now on

Step-by-step guide

I’ll try to guide you from beginning to end with this setup, and describe all the necessary steps.

If you’re an expert, you can probably use the summary below and download the Azure Data Factory pipeline I prepared for you.

Overview of steps (details below):

- Create an AAD App Registration. Add a secret.

- Create an AAD Security Group and add the SP object

- In the Power BI tenant settings, register the Security Group for API access using Service Principals

- Create a Power BI App Workspace (only V2 is supported)

- Add the Service Principal as an admin to the Workspace

- Create an Azure Key Vault and add the secrets involved

- Create an Azure Data Factory

- Make sure Data Factory can authenticate to the Key Vault

- Create an Azure Data Factory pipeline (use my example)

- Run the pipeline and high-five the nearest person in the room

Permissions required

Make sure you can do or are the follow items, or sit next to the admins, or be prepared to file a ticket and be patient

- Power BI Tenant Administrator (or Azure Global Administrator)

- Permissions to create an AAD Security Group (Azure AAD Administrator)

- Permission to create a Power BI App Workspace (V2) (by default a Power BI Pro user can do this, but it might be disabled in your tenant)

- Permissions to create an Azure Key Vault, an Azure Data Factory, and to configure the Identity Access Management on the Key Vault (the OWNER role on an Azure Resource Group provides all of this)

Step #1 Create AAD App Registration

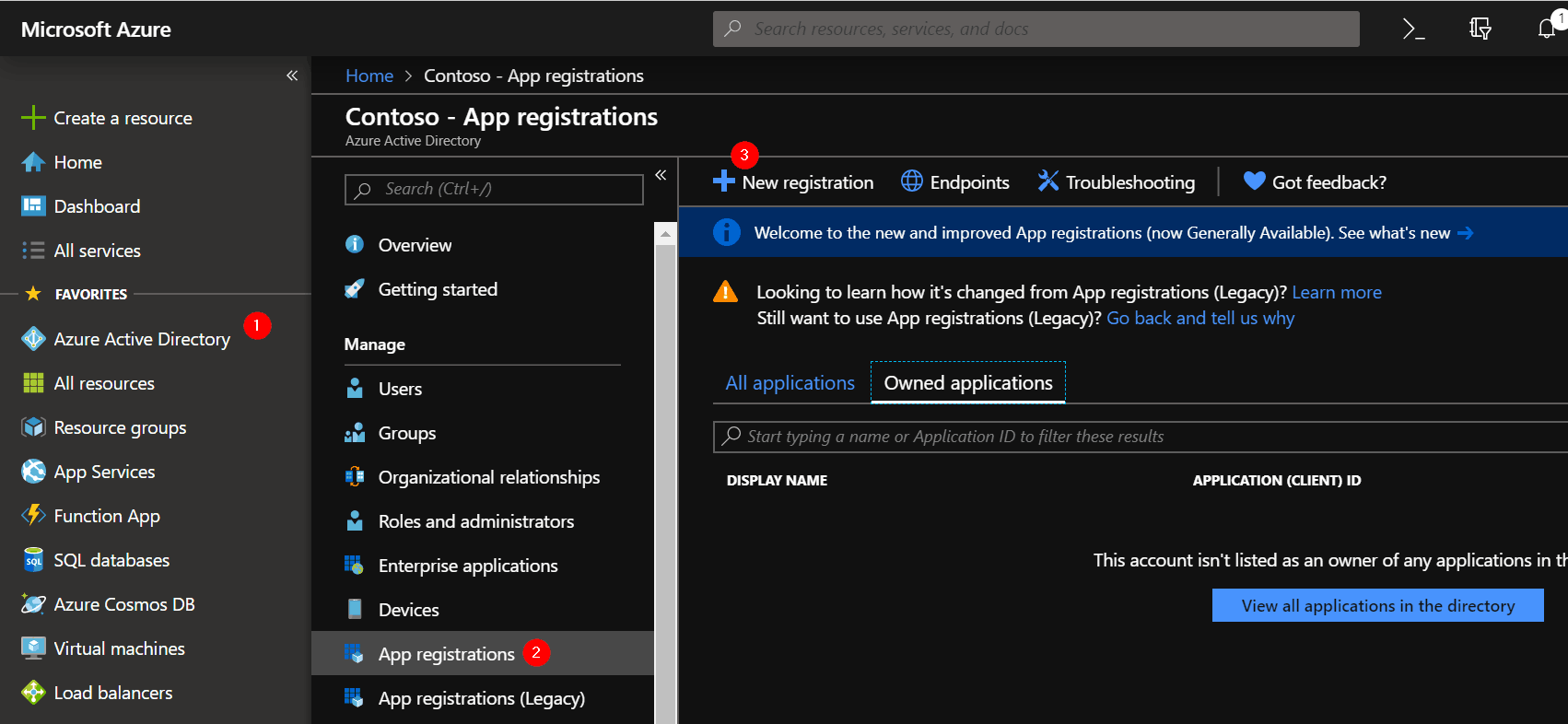

Navigate to https://portal.azure.com and open the Azure Active Directory blade. Then open the App Registrations section. Then click on ‘New registration’.

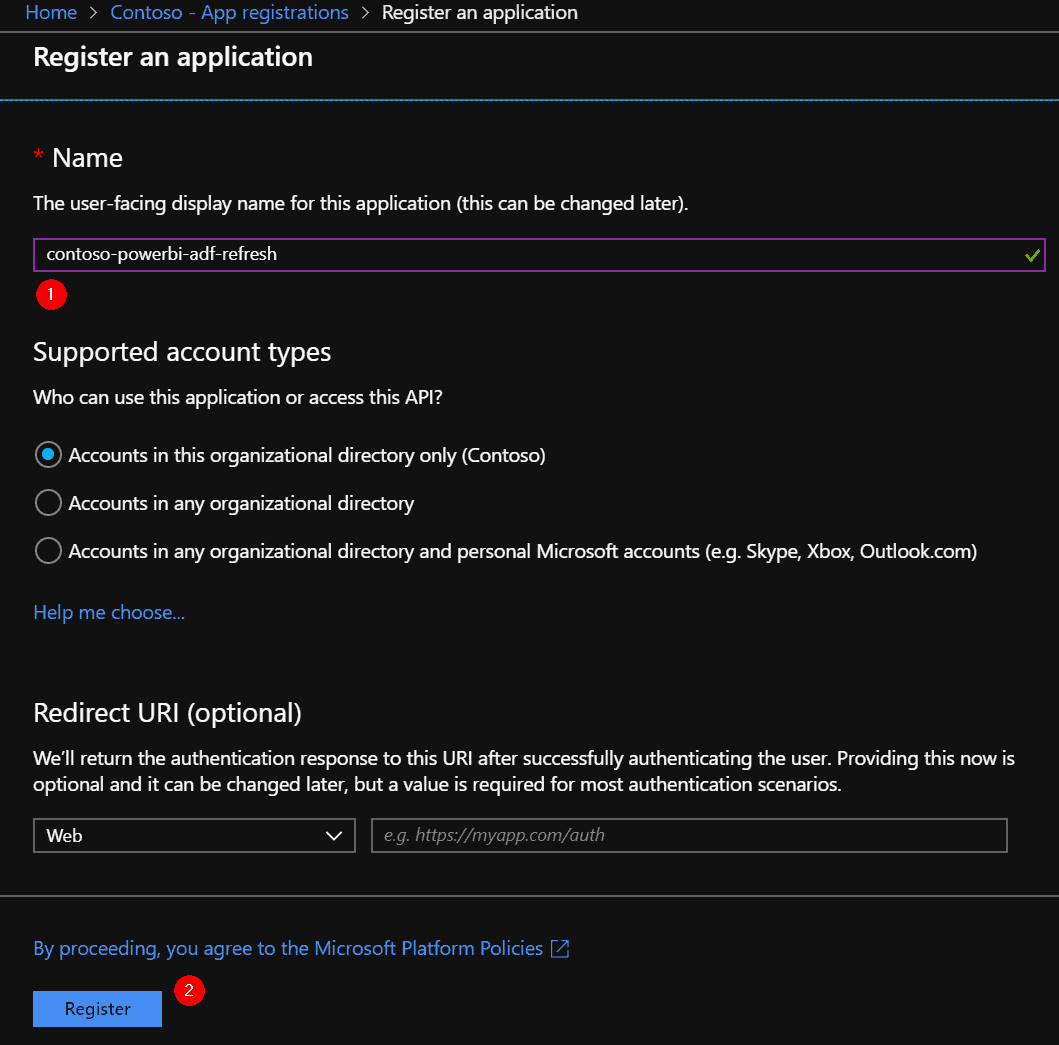

Provide a name for the application, try to make it descriptive enough to later remember what it is used for.

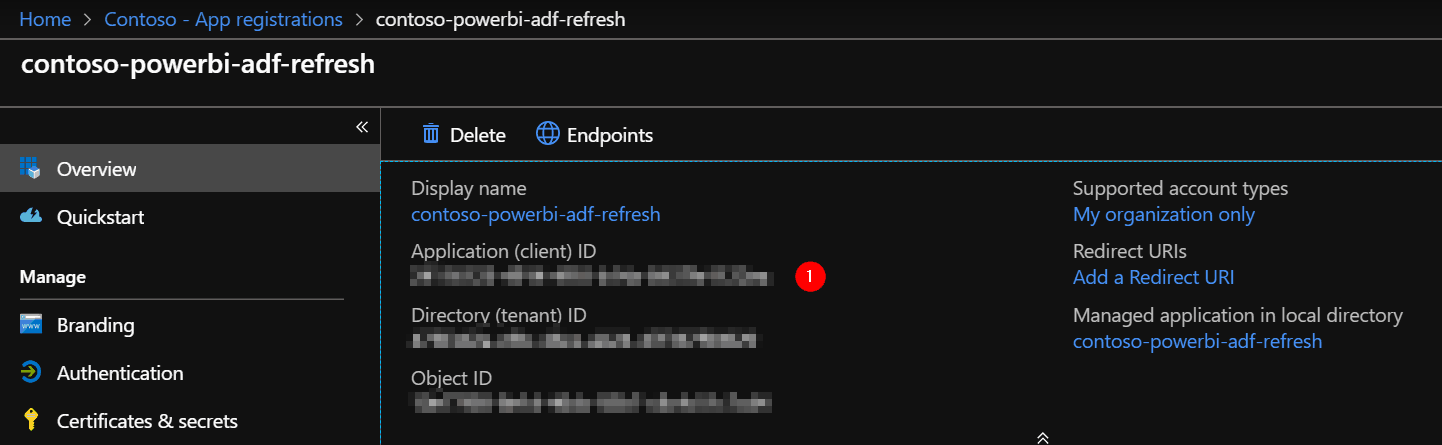

Copy the Application (client) ID value. We need this value at a later step, so temporarily save it in your favorite note editor.

Call it “ClientId”. Also copy the ‘Directory (tenant) ID’, call it “TenantId”.

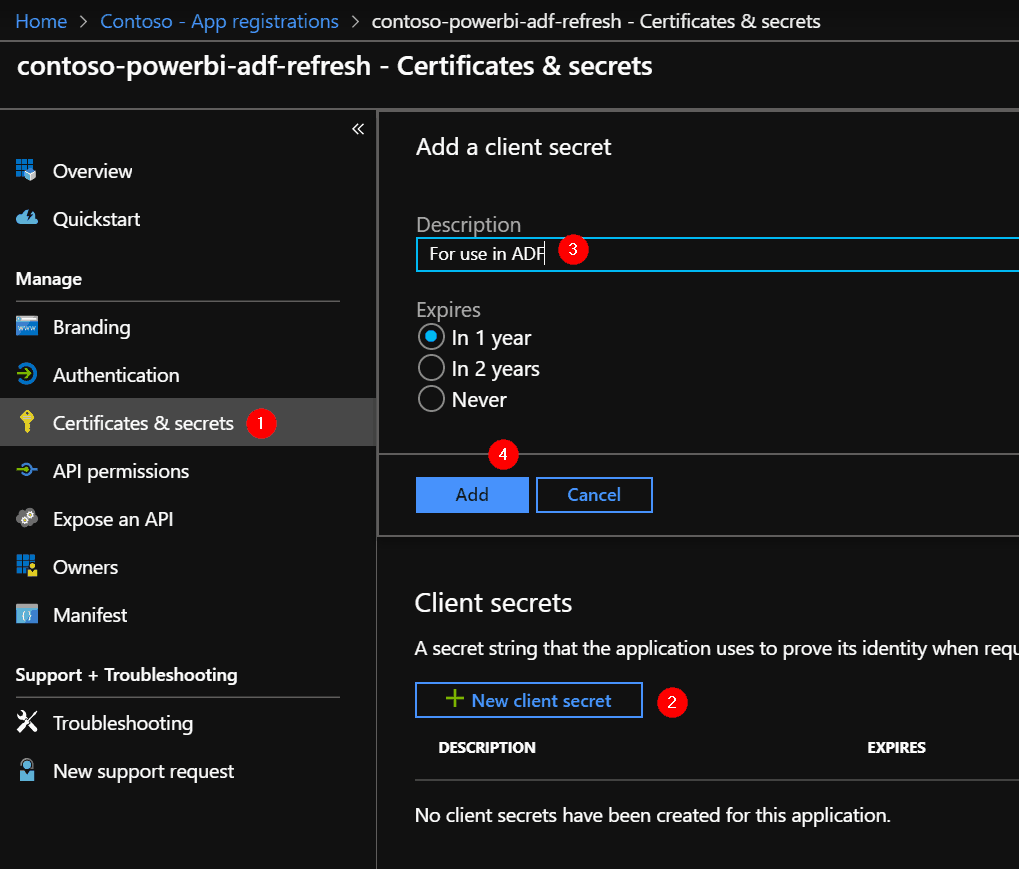

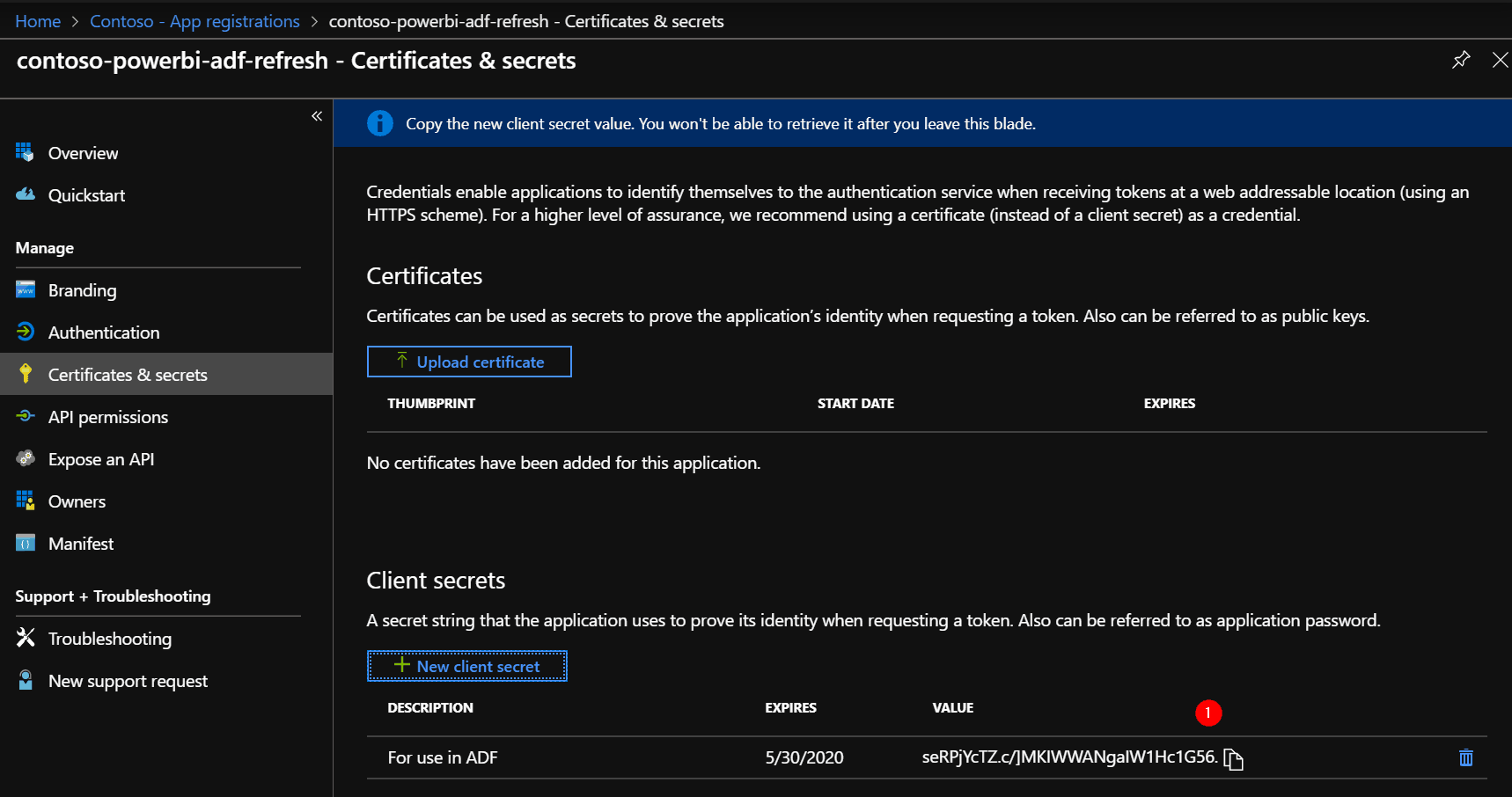

Add a secret to the application, via the ‘Certificates & secrets’ section.

Important! Make sure you copy the secret value right after you click on ‘Add’, as you won’t be able to retrieve it after you leave this page.

Save this value, call it “ClientSecret”, you need this later.

Step #2 Create AAD Security Group

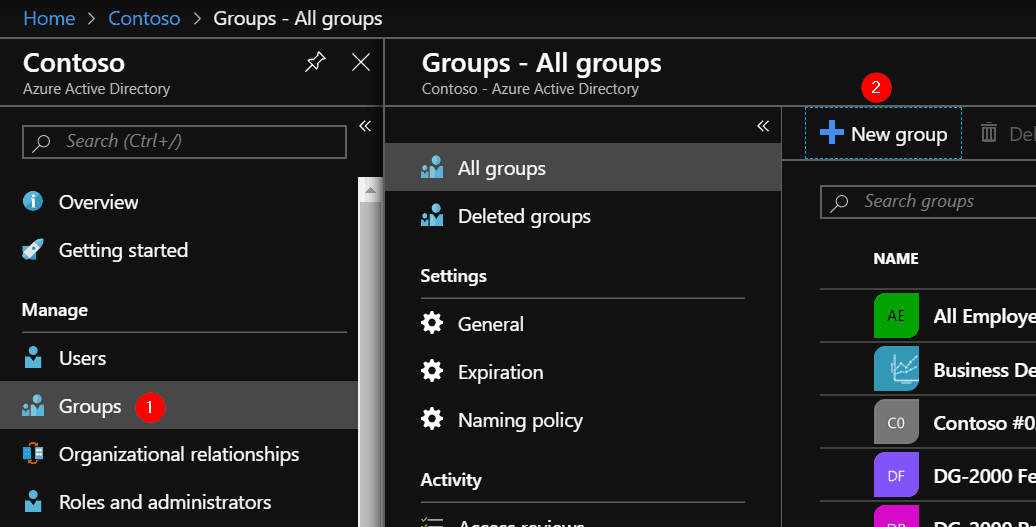

Go back to the Azure Active Directory blade and open the Groups section. Click on ‘New group’.

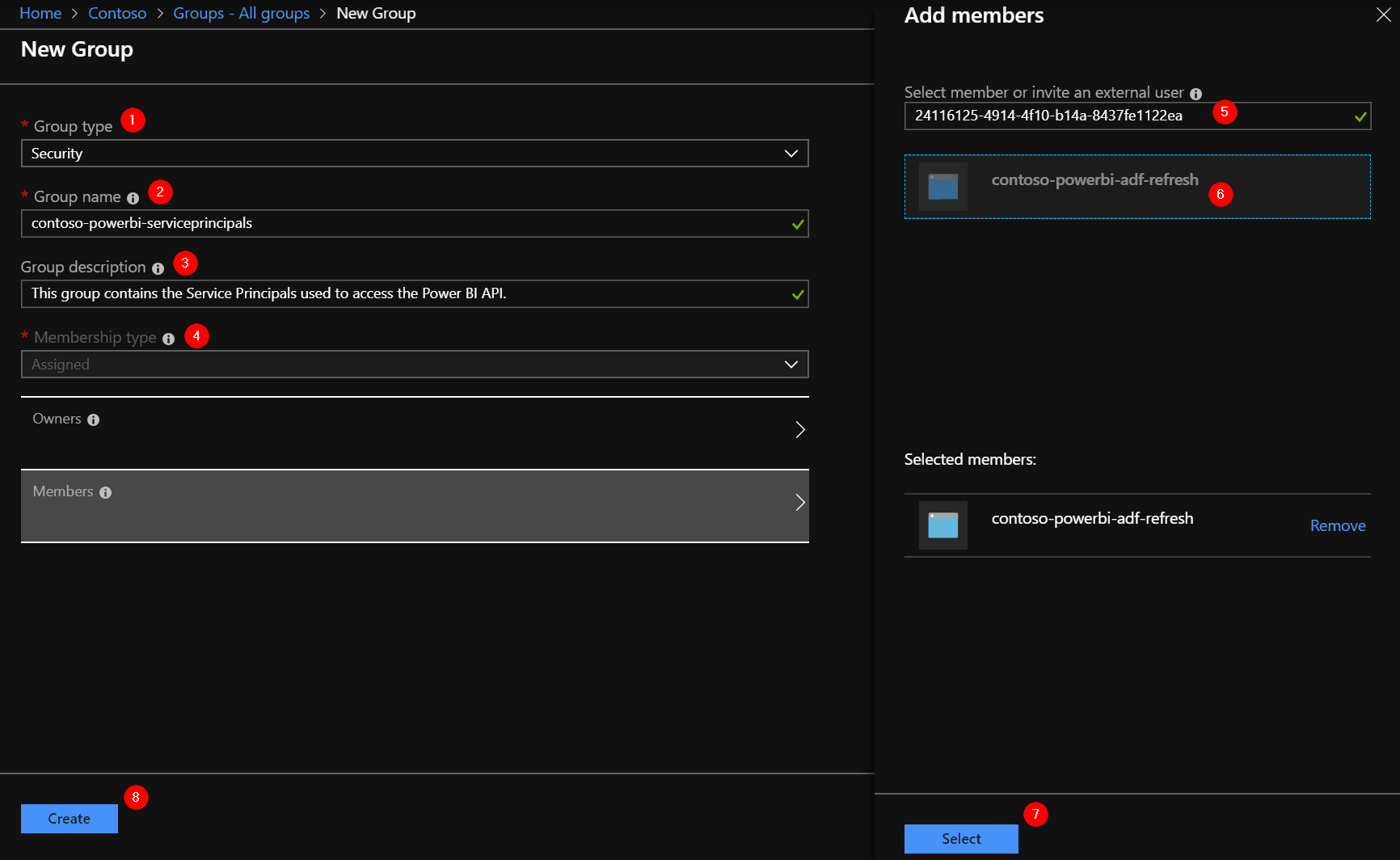

The group type is ‘Security’, give the group a decent name and description, perhaps a set of owners and most importantly: add your Service Principal as a member, by searching using the name or ClientId of the application.

Write down the name of the group in your notes, you need it later.

Step #3 Register Power BI API access for Service Principals

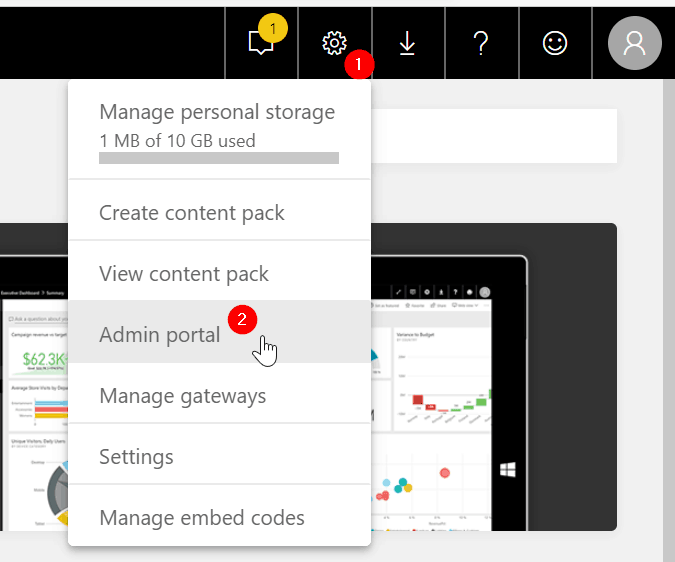

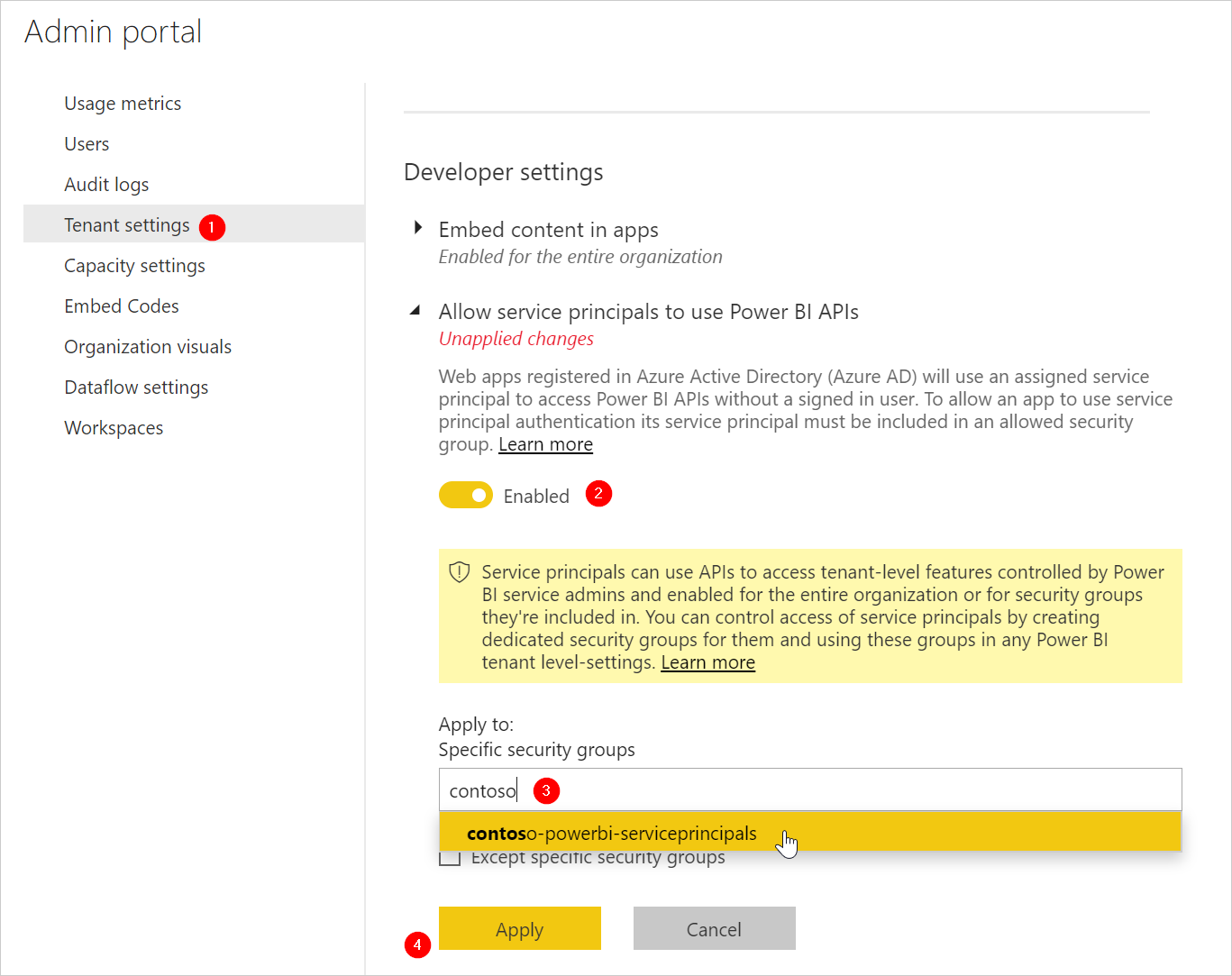

Go to the Power BI Service at https://app.powerbi.com and open the Admin Portal.

Go to the ‘Tenant Settings’ section, and scroll to the setting for ‘Allow service principals to use Power BI APIs’. Enable the setting and search for the security group you have made earlier. Click Apply when you have selected your group. This setting might take up to 15 minutes to take effect

Step #4 Create Power BI App Workspace

If you already have a Power BI App Workspace in the ‘new upgraded experience’ a.k.a. ‘V2’ style, you can use that. Otherwise, please create a new App Workspace. Note that this solution will not work with ‘classic’ App Workspaces, because they do not support Service Principal membership.

Step #5 Add Service Principal to the Workspace

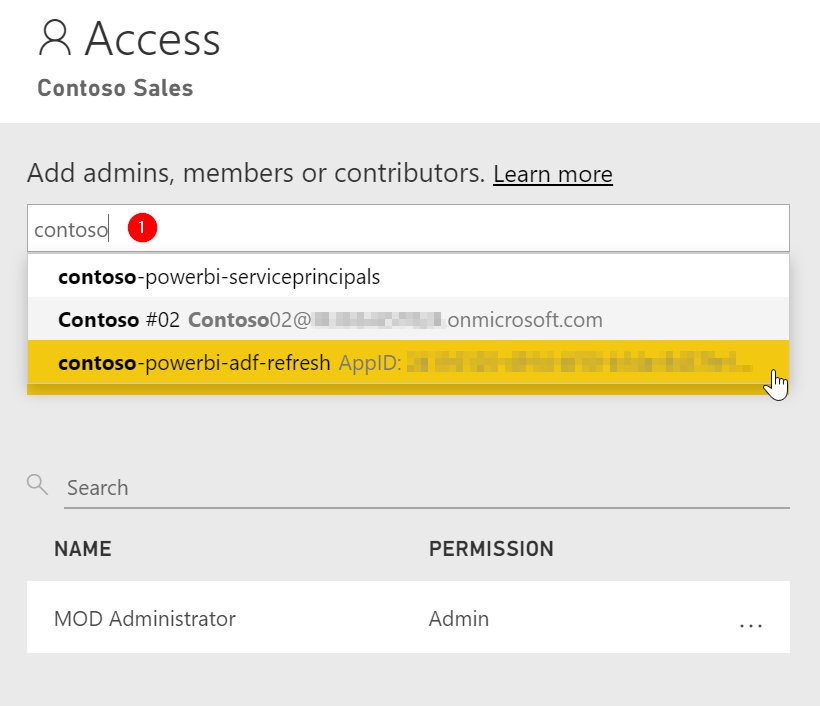

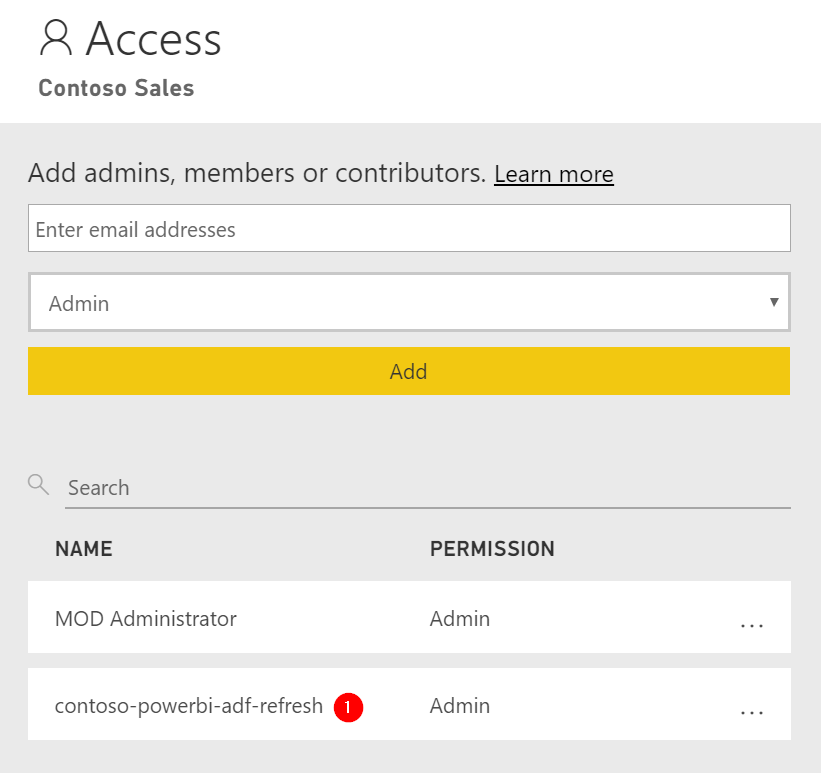

Got you V2 App Workspace and open the Access settings. In the search box, type the name of your App Registration / Service Principal, it’s the item with “AppID: ..” behind it’s name. Select it. Give it the Admin role, and click ‘Add’.

The result should be like this:

Also, this is the moment to double-check the dataset you want to refresh from Azure Data Factory. Credentials set? Can you successfully refresh the dataset manually?

Step #6 Create Azure Key Vault and add our secrets

If you already have an Azure Key Vault ready to use for this solution (and you have the OWNER role), you can of course skip creating a new one.

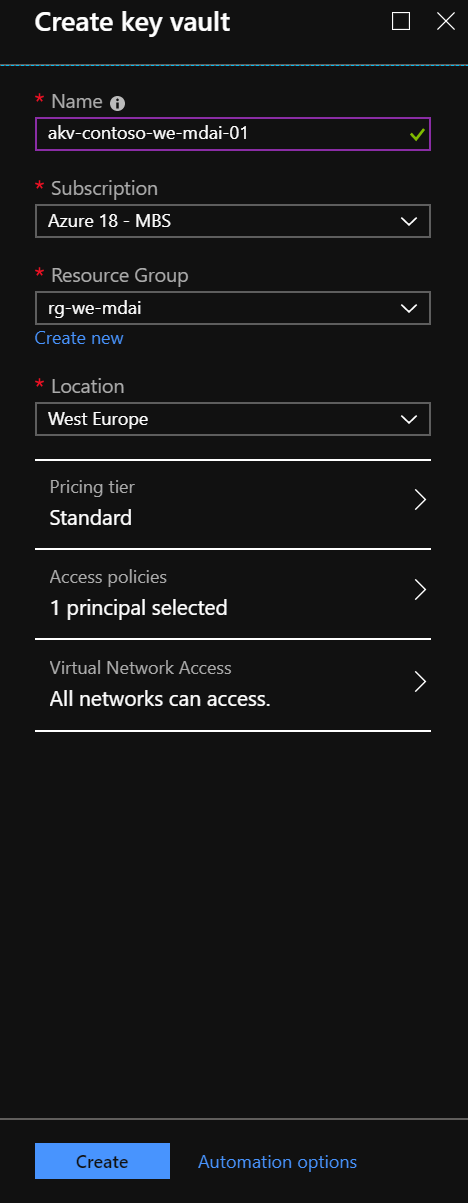

To create a new one, open the Azure Portal at https://portal.azure.com, and go the Resource Group where you want to create the Key Vault. Click ‘Add’ and choose the Azure Key Vault as type of resource. Give it a proper name and make sure you add it to the right Subscription, Resource Group, and Location. Click Create.

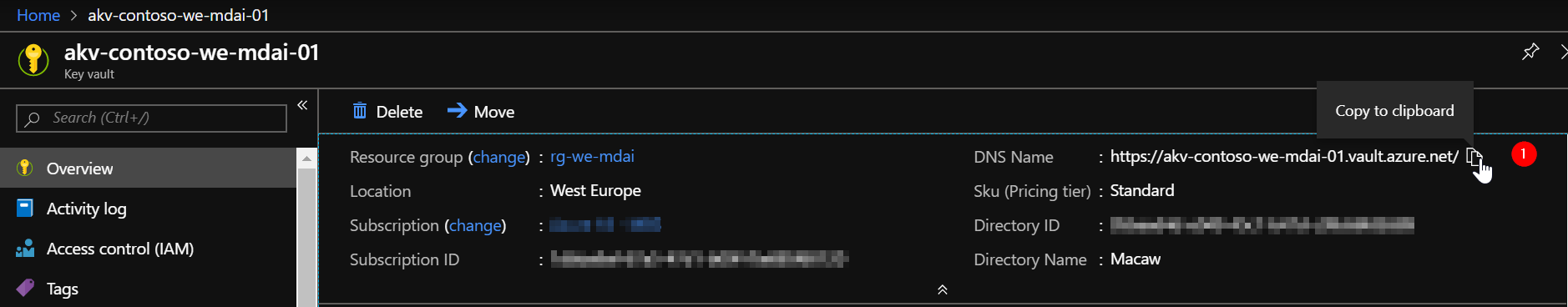

Navigate to the new resource. Copy the ‘DNS Name’ and save it, we need it later.

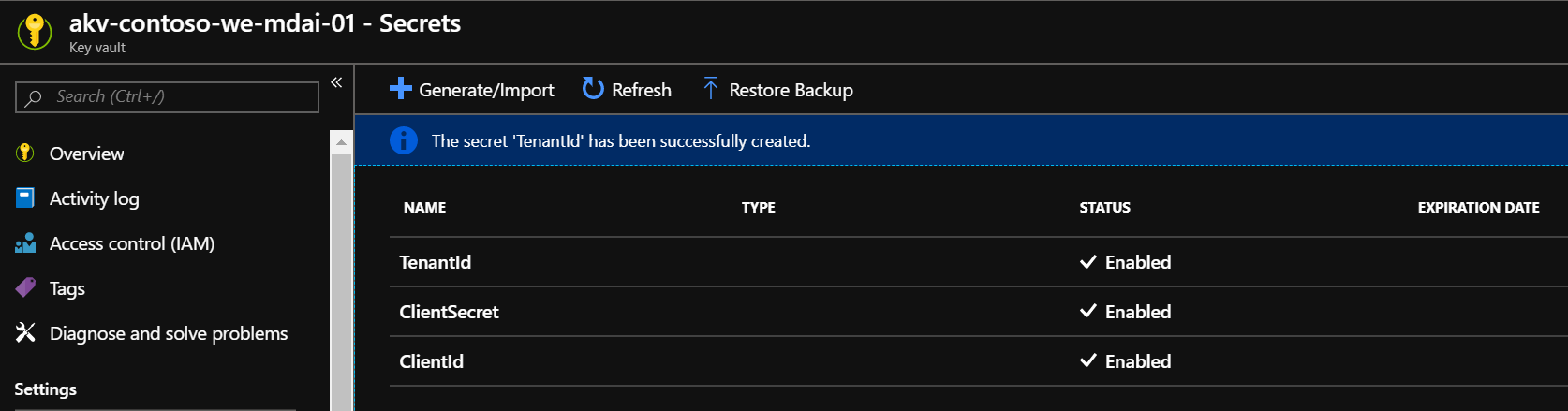

Let’s add our secrets. Open the Secrets section and click ‘Generate/Import’ to add the “ClientId”, “ClientSecret”, and “TenantId” you have written down in previous steps.

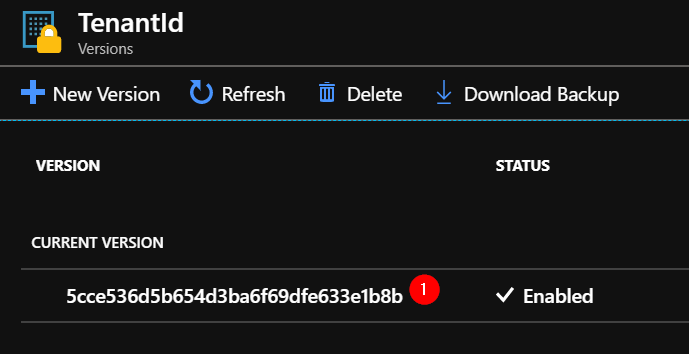

We need the name and current version of the secrets. Click on each secret to retrieve the guid of the current version.

Step #7 Create an Azure Data Factory

If you already have an Azure Data Factory ready to use for this solution, you can of course skip creating a new one.

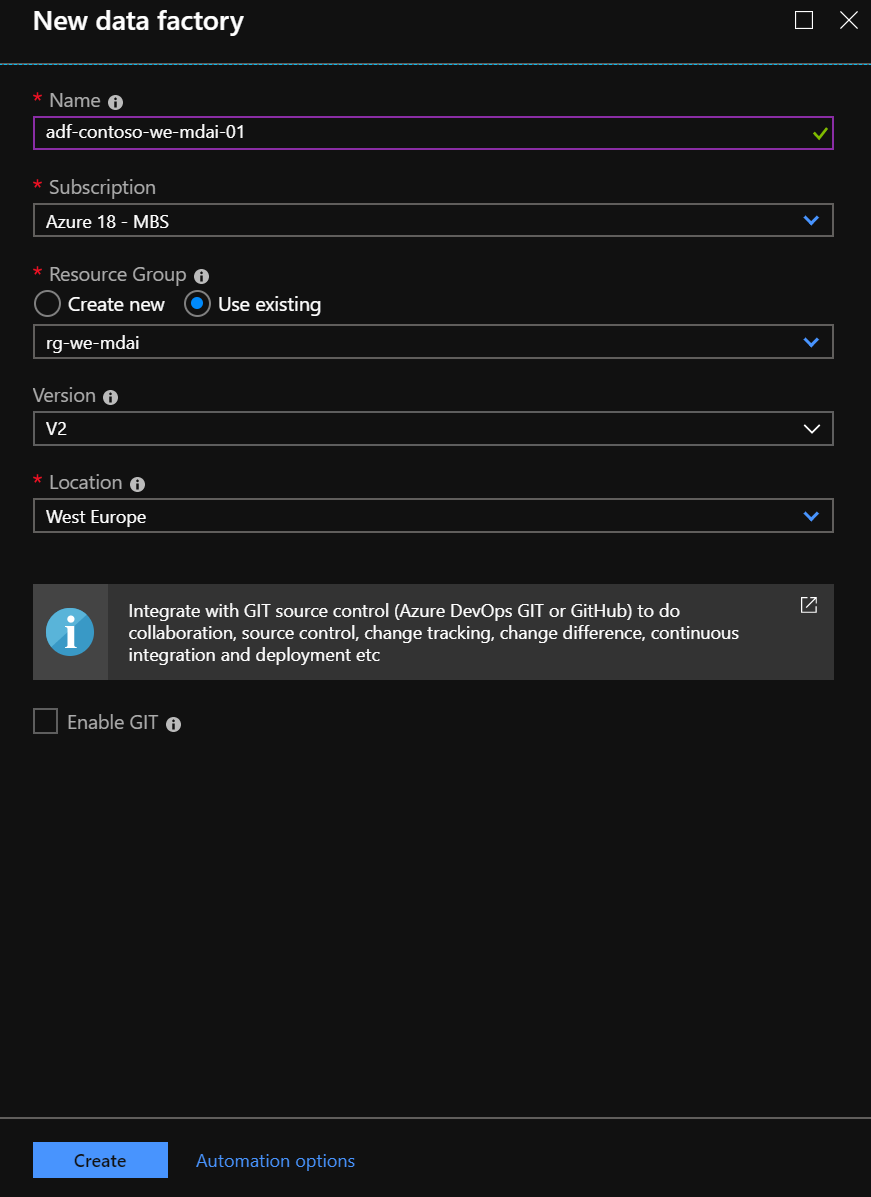

To create a new one, go to the Resource Group where you want to create it. Click ‘Add’ and choose Data Factory as type of resource. Give it a proper name and make sure you add it to the right Subscription, Resource Group, and Location. Of course we take Version V2. Click Create.

Step #8 Make sure Data Factory can authenticate to the Key Vault

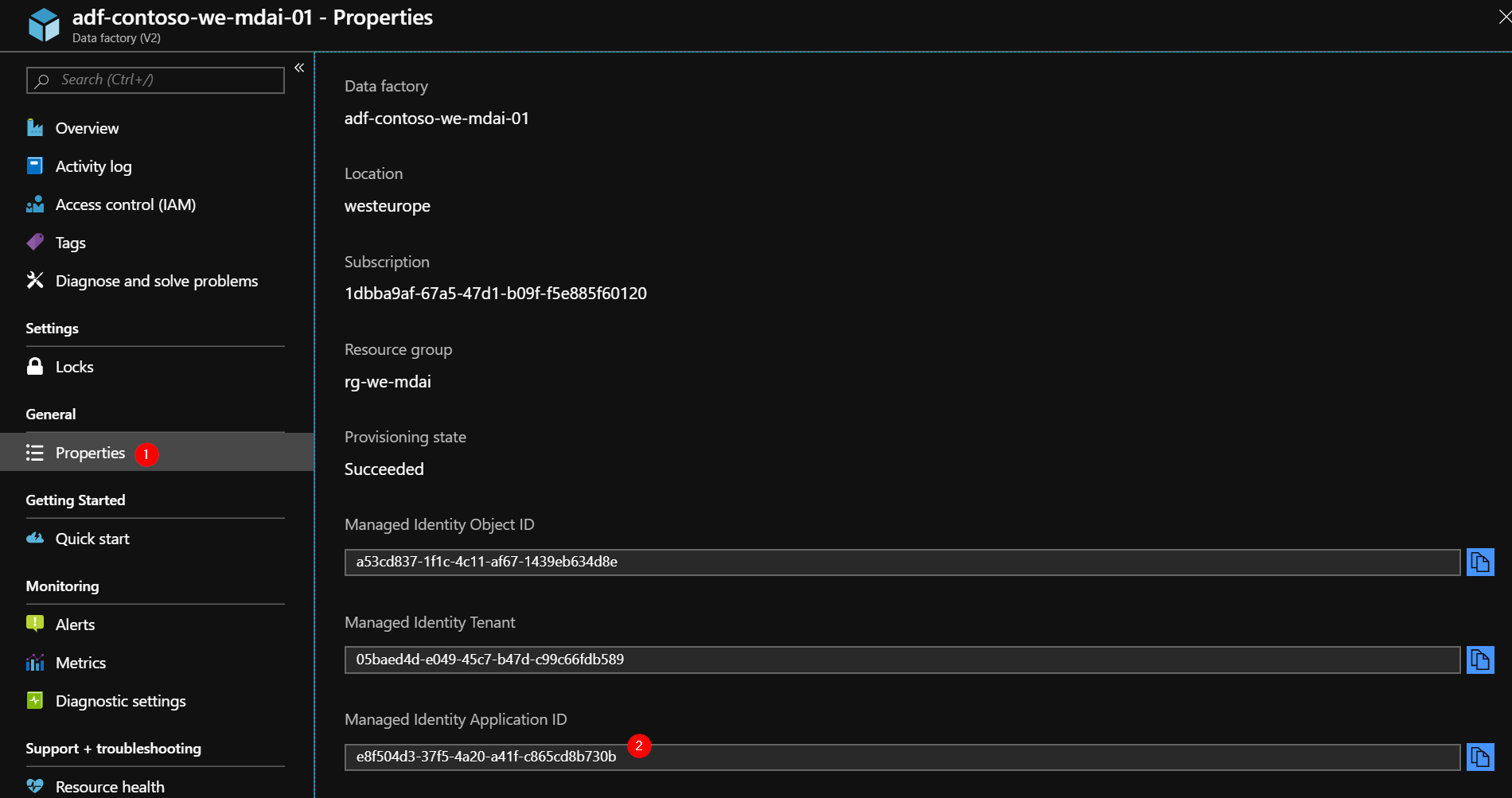

Open the properties section of the Azure Data Factory and copy the ‘Manged Identity Application ID’ value.

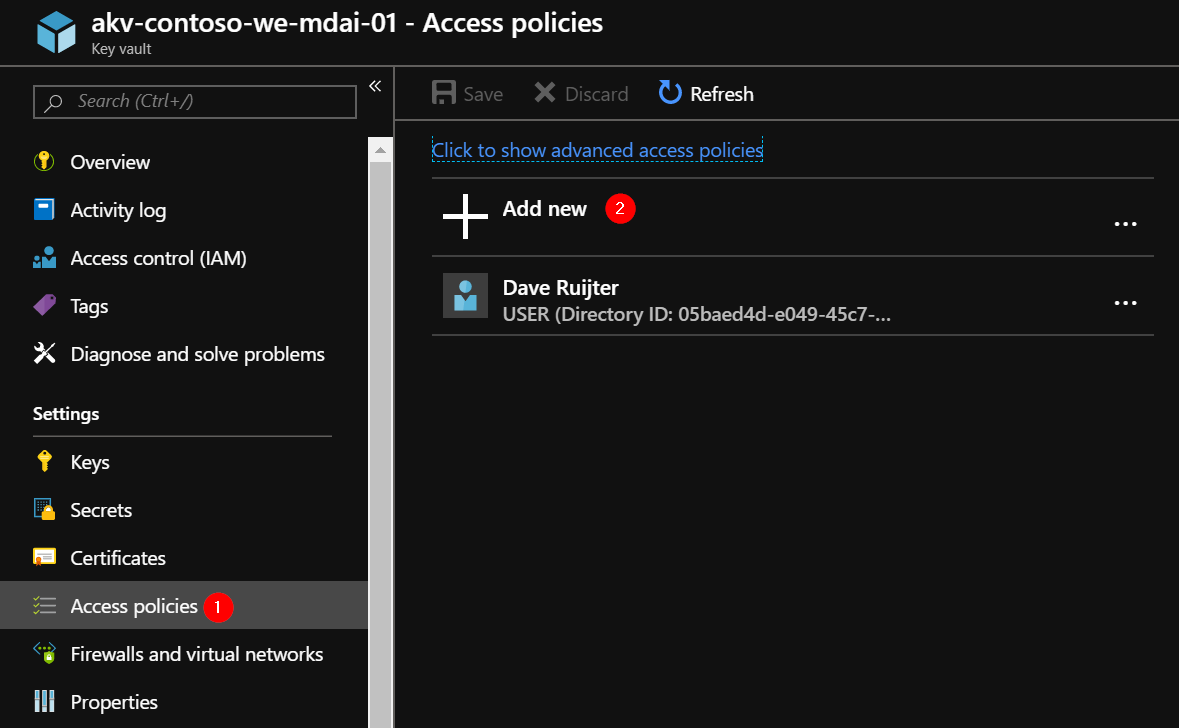

Go to your Azure Key Vault, and open the ‘Access policies’ section. Click ‘Add new’ to add a new access policy.

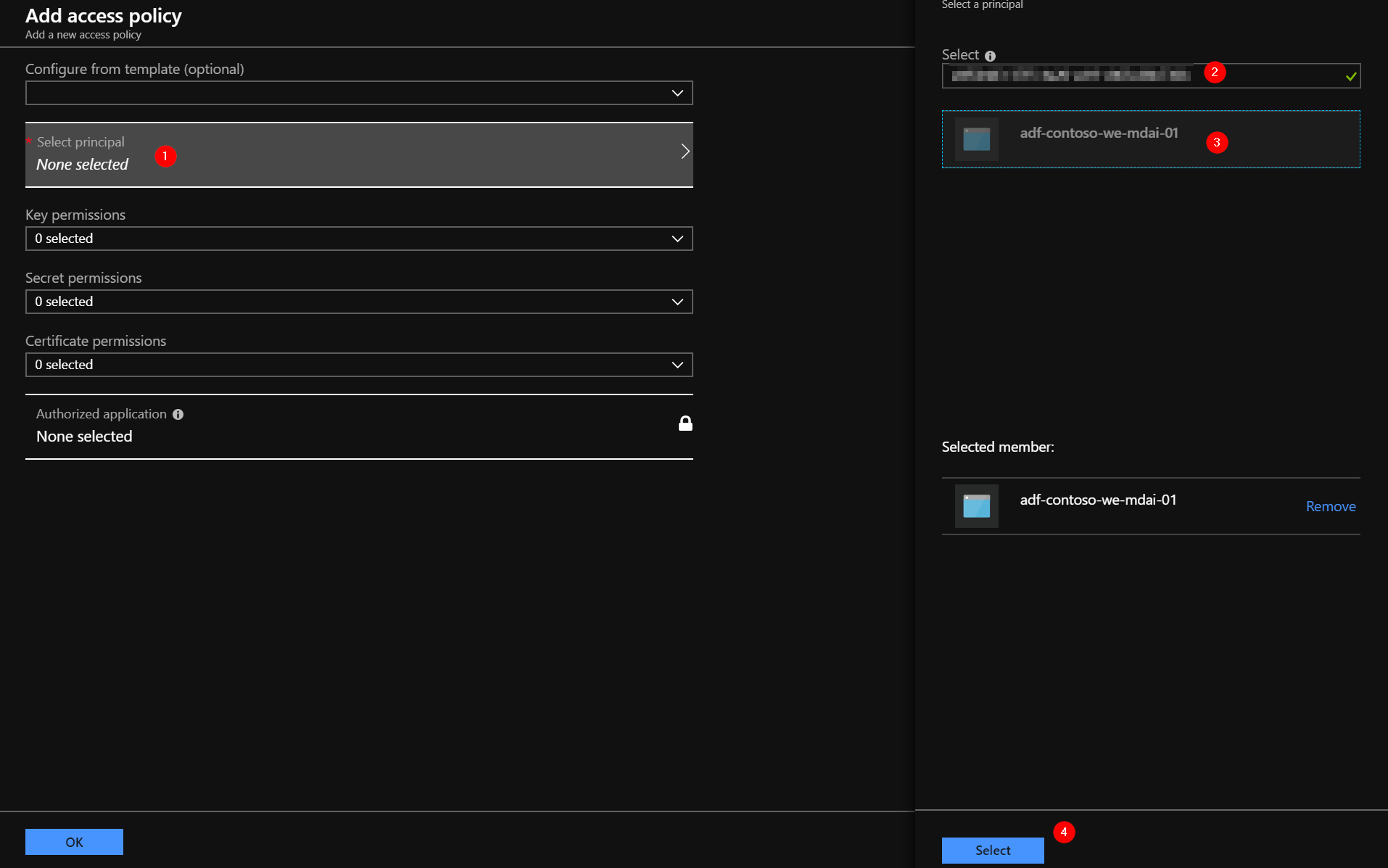

Click on ‘Select principal’, paste the Managed Identity Application ID of the Data Factory, and select it.

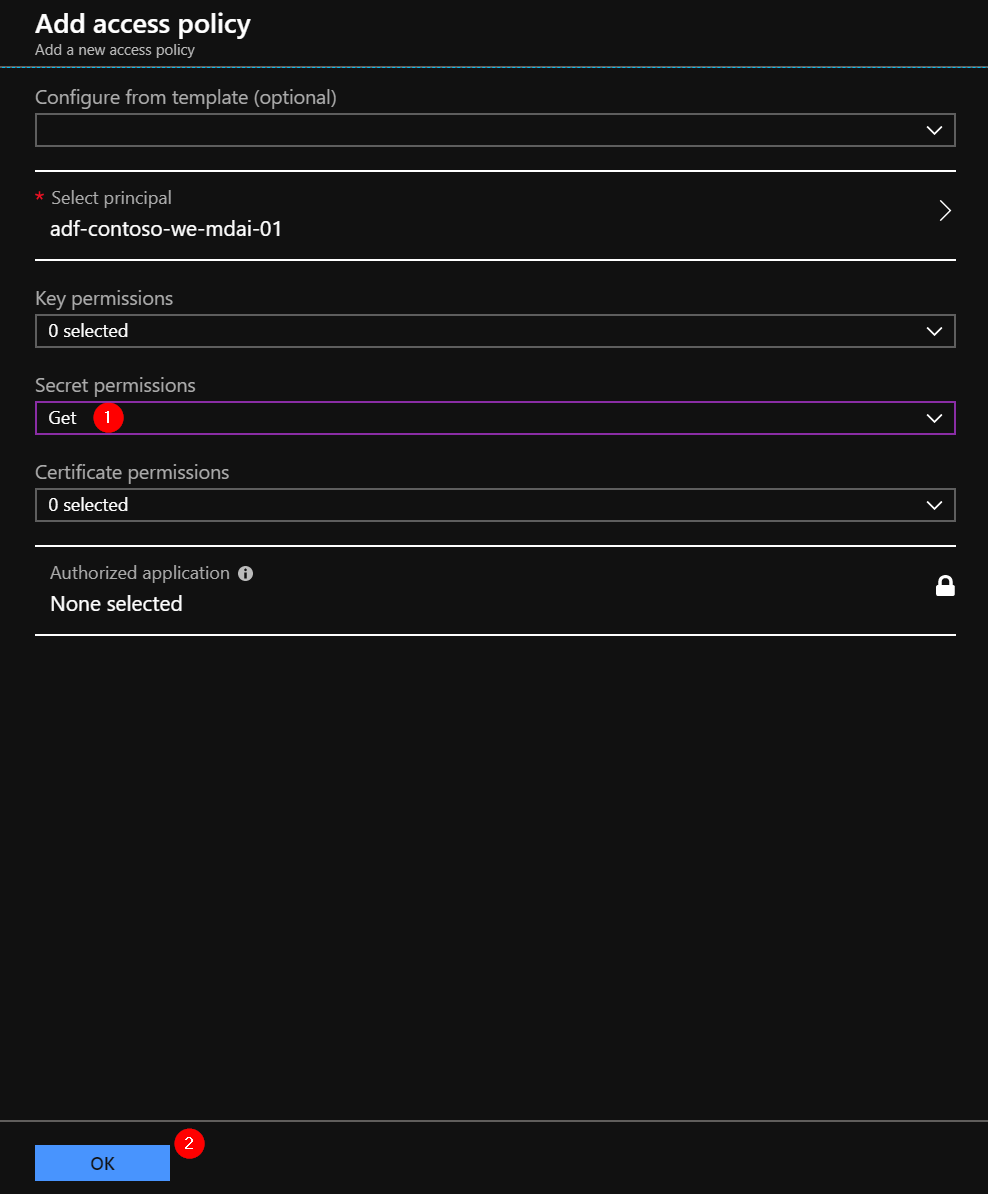

In the ‘Secret permissions’, select the ‘Get’ permission. Click OK. Then, click Save.

Step #9 Create the Azure Data Factory pipeline

Now, this is where the real magic is happening

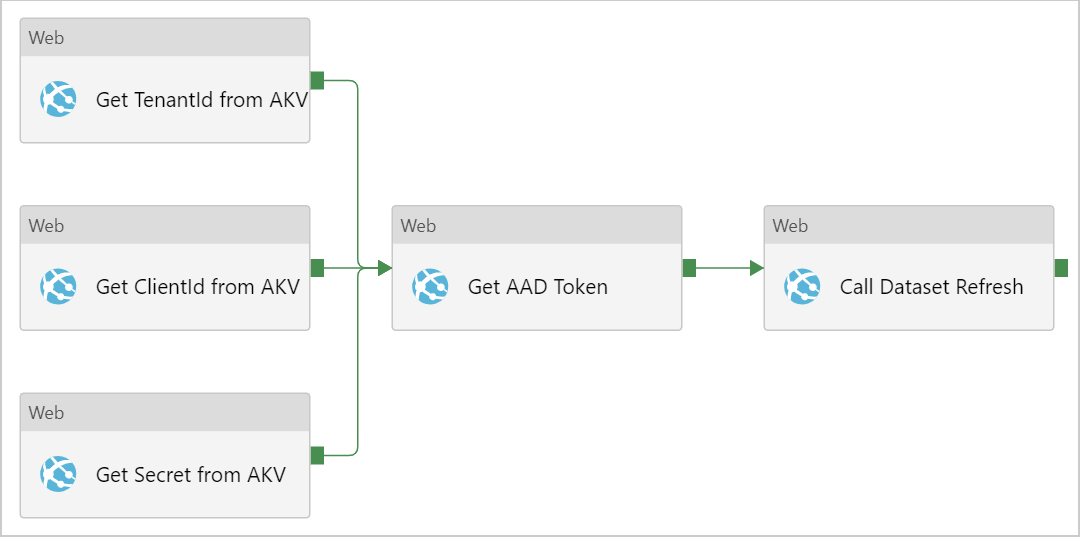

I’ve created a pipeline for you with all the activities needed. It looks like this:

The pipeline has 3 ‘stages’:

- Grab the secrets from the Azure Key Vault.

- Call the AAD authentication service and get the AAD token that we need to call the Power BI REST API

- Use the Power BI REST API to trigger the actual dataset refresh

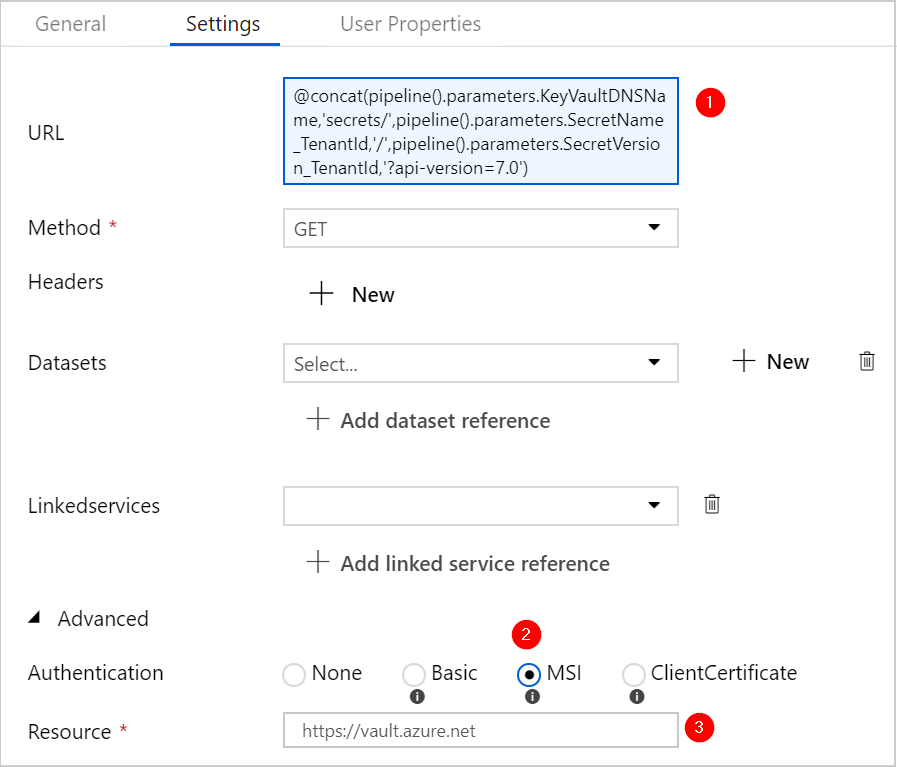

Stage 1: grab the secrets from the Azure Key Vault.

Important to note here, is that we use ‘MSI’ authentication. We will use the Managed Identity of the Data Factory to authenticate to the Key Vault (or actually its API). Remember we granted it access in an earlier step? It’s also imported to use “https://vault.azure.net” as the Resource value.

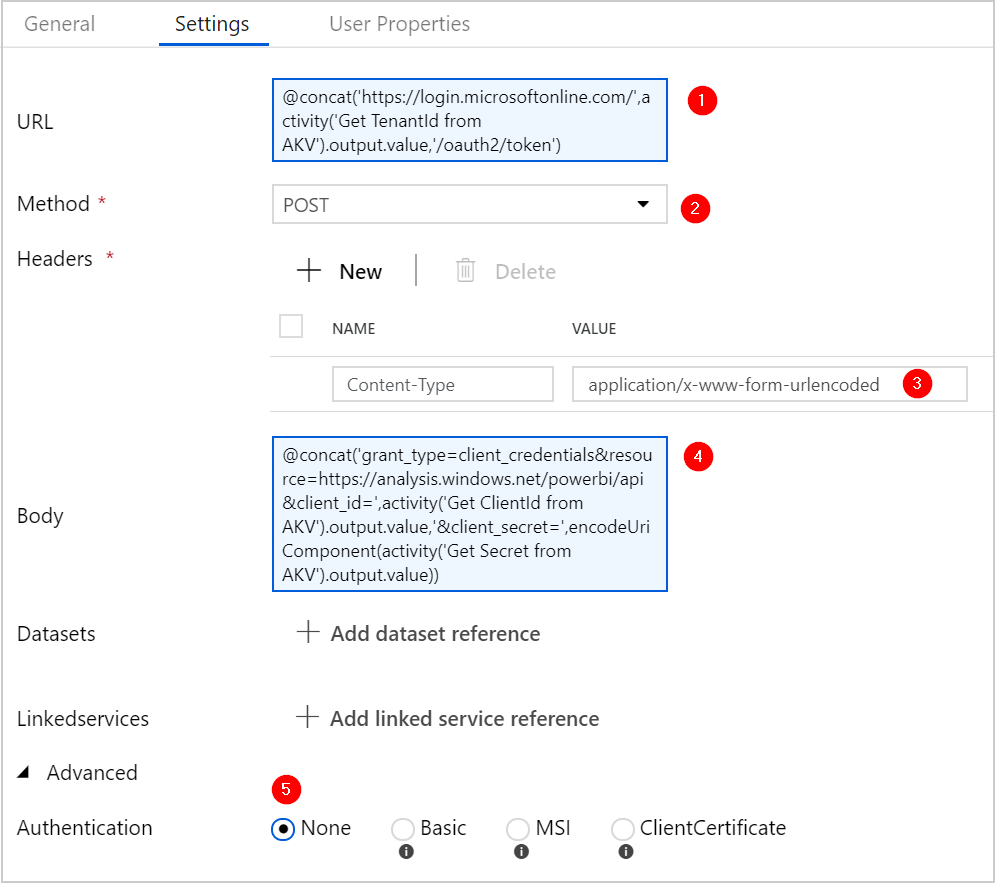

Stage 2: call the AAD authentication service and get the AAD token

Important to note here, is that we call the https://login.microsoftonline.com/<tenantid>/oath2/token URL with a POST method, and in the body of this call we send the following values:

- grant_type = client_credentials

- resource = https://analysis.windows.net/powerbi/api

- client_id = <the output of the previous Web Activity, to grab this from the Key Vault>

- client_secret = <the output of the previous Web Activity, to grab this from the Key Vault>

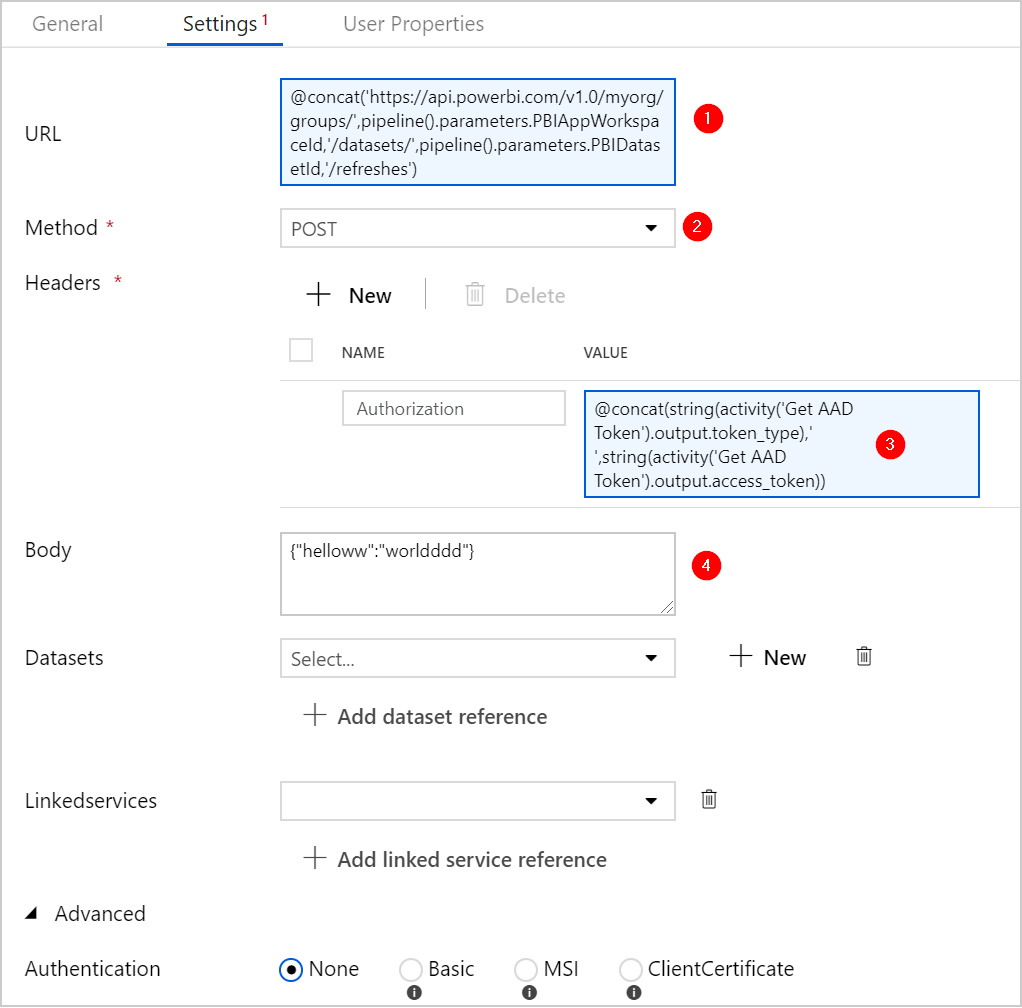

Stage 3: use the Power BI REST API to trigger the actual dataset refresh

Important to note here, is that we call the Power BI REST API (https://api.powerbi.com/v1.0) with a POST method, and as the Authorization header we send the AAD bearer token we retrieved in the previous Web Activity.

The Web Activity still requires us to have a value for the Body of the call.

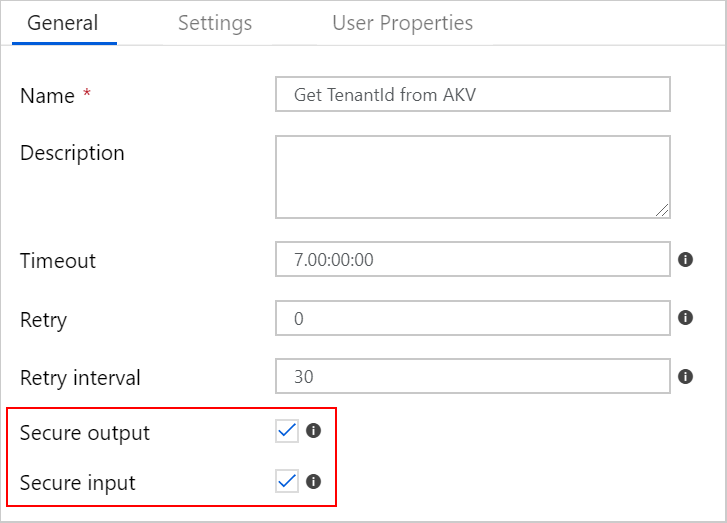

Key in the setup of this entire pipeline is that the Web Activities do not log any sensitive data when they are executed:

Download the json file here.

Add a new pipeline and open the Code view.

Replace the code with the content of the json file, and click Finish. Rename the pipeline (example: “pl_PBI_dataset_refresh”).

Publish the pipeline.

#10 Run it!

The idea behind this pipeline is to execute it from another pipeline, for instance the ‘master’ pipeline of the Datawarehouse. But, you can also trigger the pipeline standalone.

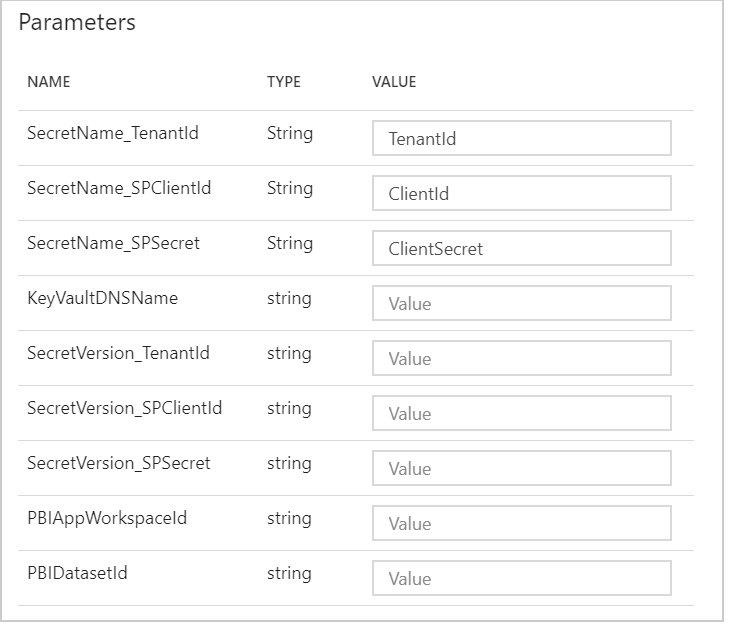

Either way, you will need to provide the necessary values.

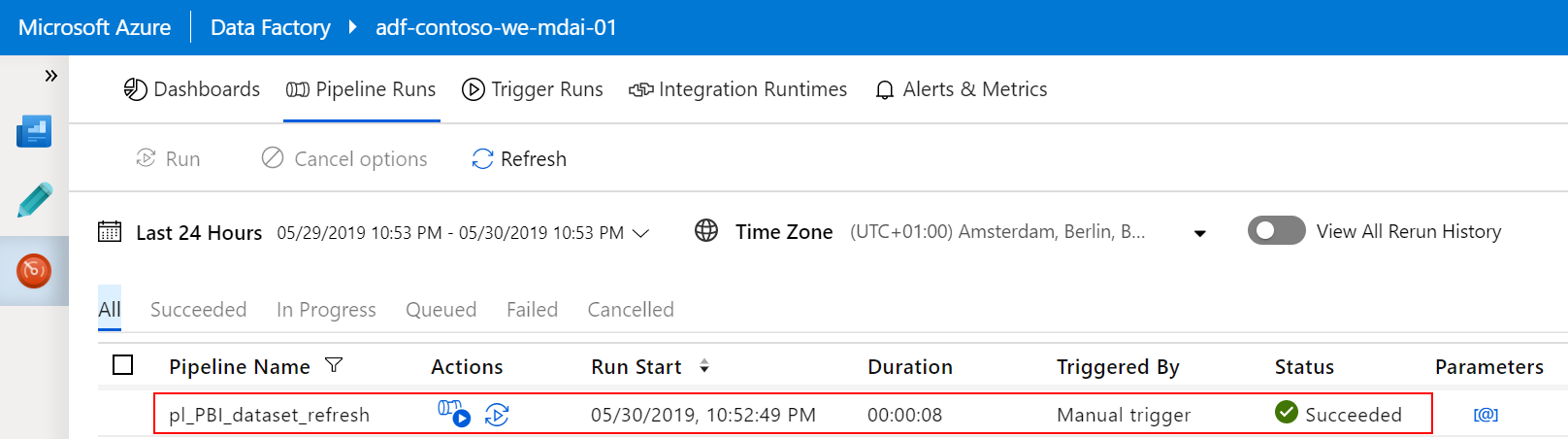

Probably you already have all of these parameter values at hand. Click Finish after you have filled in the form, and navigate to the Monitor view of the Data Factory to inspect your results: Status=Succeeded

It took in this case 8 seconds to call the API and wait for the dataset refresh to be completed (it might have failed though, but more on that later). Yes, for some reason the Web Activity executes a synchronous call (normally it’s in asynchronous mode). I like it.

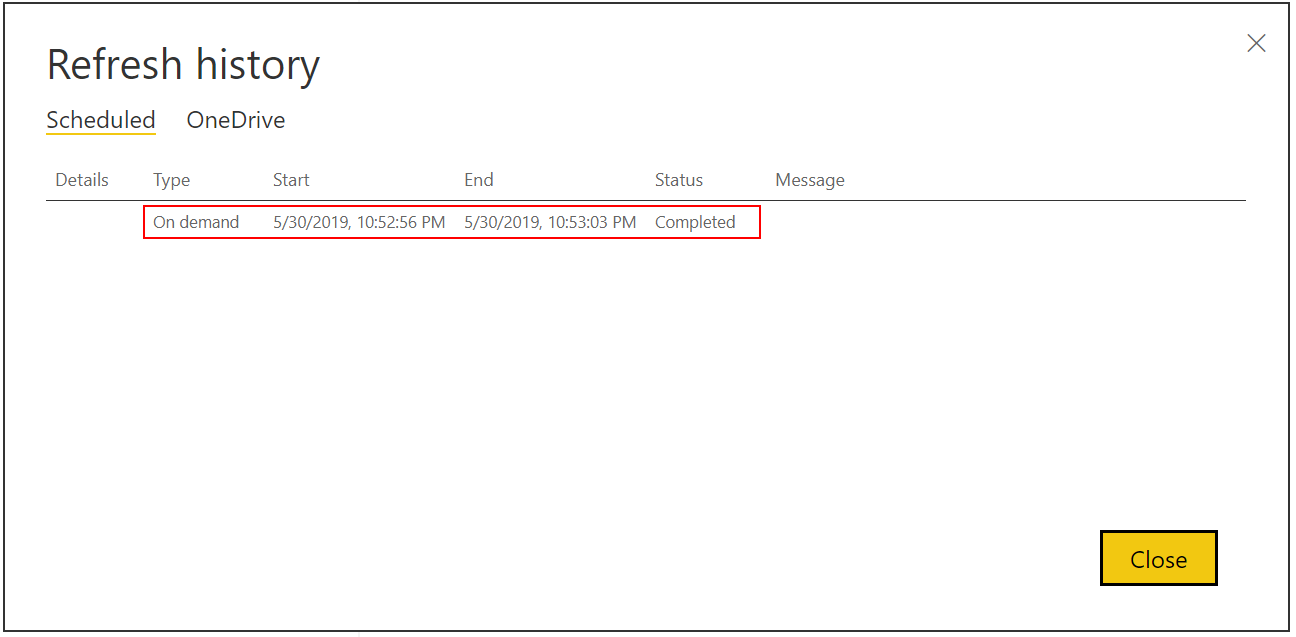

And the evidence in the Power BI dataset refresh history:

High-five the nearest person in the room

Conclusions

So we now have our solution ready and tested successfully. Let’s take a look at the initial requirements and see how we did.

Integrate in Azure Data Factory, and limit it to that (no Logic Apps, Functions, Workbooks)

Easy deployment using Azure DevOps release pipeline –> it’s super-easy to deploy Azure Data Factory pipelines using its git integration.

No personal account for authentication –> we’re leveraging a Service Principal! Make sure to use a separate Service Principal per environment, and only use it for a single cause.

Store sensitive data (secrets/passwords) in Azure Key Vault only (no secrets in code repo) –> our code is clean.

No sensitive data in logs, while executing the solution –> input & output secured.

Feel free to drop a comment below if you have any questions on this solution (or ping me on Twitter/LinkedIn).

Ideas for next steps

I think it would be great to add a Web Activity at the end, retrieving the dataset history to see if the refresh actually succeeded, and to notify the operators of this process.

I’d like to daisy-chain a Power BI dataflow refresh with a Power BI dataset refresh

Woud it be better if we use logic apps with PowerBI REST API Custom Connector using Swagger API file. I have developed the connector in Logic Apps and then executing Logic Apps from the Azure Data Factory Web Activity. This allows you to do almost all the Power BI activities supported using Power BI

Also an option! It depends on your situation which option is better. LogicApps can do the job as well. But, every extra type of resource you add to a solution architecture introduces new challenges (deployment via DevOps releases, IAM for devs/ops, etc.). In my experience the deployment to production of Logic Apps via Azure DevOps is not easy and requires you to rebuild parts. How did you take care of that?

Does the Custom Connector approach support the use of Service Principal for the authentication?

Anyway, it’s good to have multiple options .

.

Pingback: Last Week Reading (2019-06-02) | SQLPlayer

Pingback: Power BI Governance, Performance, email subscriptions and more... (June 3, 2019) | Guy in a Cube

Awesome post!

Thanks :)!

Pingback: How To Trigger A Power BI Dataset Refresh Using An Azure Data Factory Web Activity – Opowieści o SQLu, BI, życiu, innych używkach

Thanks for a great blog post! Very helpful. Do you know if it is possible to call Power BI rest api with managed identity of logic app or data factory instead of creating service principal and generating app key?

Thanks! Calling the API using Managed Identity is unfortunately not possible. You can check on ideas.powerbi.com if you van vote for an existing idea, or create your own idea!

Good article for PowerBI data refresh solution! But for PBIRS, not all users can use Azure AD. As a supplement, there’s another way to refresh data — using stored procedures:(https://www.linkedin.com/pulse/power-bi-rs-setting-data-refresh-trigger-mode-davis-zhang/)

This option is only relevant for PowerBI on Premise Report Server solution and not relevant for PowerBI Service for it we need to use rest apis

Hi.

The problem I have is when I want to assign “managed identity application id” from ADF into the access policy of KeyVault I see nothing, no selection is possible to choose for the Service Principal.

Not sure what I am doing wrong, or maybe I am lacking some privileges even though I am a Resource Owner.

Can I stop power bi refresh job, if my data load fails in database layer?

No unfortunately not.

We don’t see the Managed Identity Application ID of the Data Factory after creating the data factory.

Great article Dave! Helped us a lot.

Good to here!

Hi,

I have built it exactly same without AKV and hard code the values in URL

The pipeline works perfect when I debug and execute trigger manually.

It doesn’t work with a scheduled trigger.

Also, I tried adding it to master pipeline, though the rest of ETL pipelines execute successfully, however PBI Dataset refresh pipeline do not execute and not appear on the monitor window.

Can you suggest how to fix this?

Cheers!

RS

Hello Dave, thank you for posting this, it has been working well. I do get an error occaisionally, on the “call dataset refresh” step:

{“error”:{“message”:”The request was blocked by DoSP “}}

Just wondering if this was something you have run into before.

Thanks again for the great content.

Hi Joe, thanks! No that does not ring a bell.

we got that same error today as well. so we’re gonna increase the polling interval

I’m having the same issues last few days as well. This have worked fine until now. Do we need to raise a ticket to Microsoft?

Hi Marius, I think that’s a good idea, not much else to do from ‘our side’ to investigate this… I’d appreciate it very much if you can keep us posted on the progress of the ticket!

Same issue here!

Hi agian everyone.

So, I have been in touch with Microsoft, and this is actually a known issue.

This is what I got as an answer:

It is a known issue from Power BI end.

Product Team is still working on this issue. But I want to collect few traces from your end and share with them.

The cause of this issue is certain IP of ADF Azure integration runtime (IR) in your region is blocked due to PowerBI’s Dos Protection mechanism.

They have provided a workaround for this issue:

We could use Azure IR (Integration runtime) in other regions(outside of existing one) or use a selfhosted IR in web activity to the temporarily workaround this issue.

So, we had a selfhosted IR in our ADF, and when I switched to this it workes like a charm again. This you need to change in the “Call Dataset Refresh” component in the ADF pipeline.

Best regards

Marius

Hi Marius! Thanks for the reply and detailed information on this topic. This explanation and the workaround to use the self-hosted IR is excellent, thanks again for sharing.

Fantastic detailed post – thanks Dave. A conceptually simple process but a very laborious one to implement! Your pipeline and guidance notes were massive time savers for me.

I havent given permission to the tenant on the POWERBI app and

I get an error “Invoking Web Activity failed with HttpStatusCode – ‘Unauthorized’, message – ”, in the CALL DATASET REFRESH WEB ACTIVITY.

Could this error be caused because no permission has been given to the tenant ?.

Thank you for this amazing blog

Hi Rachael, .

.

Yes, that could very well be the issue.

Other times I have seen the 403 unauthorized message was when the user or service principal was not part of the workspace (or only had the viewer role), or when the workspace was assigned to an Azure Power BI Embedded Premium Capacity that was in the suspended state.

Unfortunately, the 403 error can mean a LOT of different things

Hi Rachael,

I am facing the similar issue. Can you please what actions you took for resolving this issue

Hello Racheal,

I have given the required permission in PowerBi. Still i am getting the same error. Am i missing out any other thing?

It stopped working for us too all of a sudden, try change the authentication method of the last web activity calling PBI service to “None” and see if that helps. Something has changed it looks like.

Thx for this great post. It was very useful for us! Also very complete! Please contineu….

Great post , Could you please suggest how to do the same using SPN certificate instead of client secret , I am able to extract the certificate of SPN from key value using web activity , bit not sure what value from the certificate i have to use below

@concat(‘grant_type=client_credentials&client_id=’,,’&client_assertion=’,activity(‘Web1′).output.cer,’&client_assertion_type=’,’urn:ietf:params:oauth:client-assertion-type:jwt-bearer’,’&resource=’,’https://analysis.windows.net/powerbi/api’)

Hi, I don’t know how to do that. Never had a use case with an SPN certificate.

Thanks for the post. There is a typo at step 9 stage 2

https://login.microsoftonline.com//OATH2/token.

We lost some time trying to understand what’s wrong

Hi Dave,

Thanks for sharing the JSON code.

The pipeline was executing successfully, but the dataset was not getting refreshed.

The JSON code helped a lot. The reason the dataset was not getting refreshed was related to the “Authorization” header within the “Call dataset refresh” activity.

Actual Header : @concat(string(activity(‘Get AAD Token’).output.token_type),’ ‘,string(activity(‘Get AAD Token’).output.access_token))

Incorrect Header : @concat(string(activity(‘Get AAD Token’).output.token_type),”,string(activity(‘Get AAD Token’).output.access_token))

There is a space between the token_type & access_token, but mine was not having.

Hope it helps others.

Thanks Pravin for sharing the information!

Great solution – thanks very much for giving such clear steps to follow, it made it easy to implement!

Hi,

Thanks for this great post.

I have 2 environments (dev & pro) with 2 KeyVault.

Is there a way to dynamically set the good KeyVaultDNSName in my pipeline ?

Thank you

I recommend the scripts from SQLPlayer to do this!

https://sqlplayer.net/azure-data-factory/

Hello, Dave.

Thank you for the excellent article.

I’m trying to receive the report refresh details from PowerBI instead of refreshing the PowerBI report.

In the last activity, I used the Get method and passed the following groupid and dataset details in URL : https://api.powerbi.com/v1.0/myorg/groups/groupid/datasets/datasertid/refresh

I took the identical steps as you, but I’m getting a 401 Unauthorized error.

Could you please help or suggest what i am missing.

I wonder if you can help me with the error I got?

Operation on target Call dataset refresh failed: {“error”:{“code”:”ItemNotFound”,”message”:”Dataset 9f093480-80f5-48d4-ba20-8ada2ef23e78 is not found! please verify datasetId is correct and user have sufficient permissions.”}}

I have the same problem Hany, have you been able to solve it?

So, my issue was that the SPN wasn’t added as an Admin to the PBI workspace. Now it is and everything works.

Great you were able to solve it, and thanks for sharing!

This is great post Dave and helped us in setting up the flow.

KUDOS.

Great post.

Is there any way to refresh all datasets in the given Workspace? Maybe create a GET call to recieve all DatesetID’s and iterate over the given DatasetID’s?

HI Dave,

Great post. Unfortunately, I am getting the following error when I refresh the dataset:

Invoking Web Activity failed with HttpStatusCode – ‘401 : Unauthorized’, message – ”

I have tried everything but nothing seems to work for me. I can see that I am successfully getting a token output but when I use it to refresh the PowerBI dataset it fails. Is there any workaround ?

I have granted Admin access to my workspace and made all relevant settings using AdminPortal of BI service.

HI Dave,

Great post. Unfortunately, I am getting the following error when I refresh the dataset:

Invoking Web Activity failed with ‘401 : Unauthorized’, message – ”

I have tried everything but nothing seems to work for me. I can see that I am successfully getting a token output but when I use it to refresh the PowerBI dataset it fails. Is there any workaround ?

I have granted Admin access to my workspace and made all relevant settings using AdminPortal of BI service.

Hi,

Great post. I cannot use token to refresh the Power BI dataset. I get unauthorized 401 error message. I have followed your instructions but it is not working. Any guidance or help will be really appreciated